Demystifying AI: Going Beyond the Hype

Explore the practical uses of AI in the workplace with this easy-to-understand guide that helps you move beyond the hype to real-world applications.

May 13, 2024

10 minutes

Don’t Believe the Hype

Everywhere you turn, people are talking about AI and promising that it will revolutionize how we work. Some worry that it will jeopardize their jobs, while others are frantically rushing to adopt it to gain a competitive advantage. Meanwhile, the jargon and mystique surrounding AI leave many with a palpable fear of being left behind.

Set aside the worries and the excitement, though, and you'll find that AI is nothing new. It has been woven into the evolution of technology for roughly three-quarters of a century. This framing is critical when considering how current advances might apply to you.

You Are Already Using AI

In 1939, when The Wizard of Oz was adapted for film, people fell in love with the Tin Man, a mechanical man who blurred the line between human and machine. Artificial intelligence would later emerge as an academic discipline at Dartmouth in the 1950s, building upon research by engineers, psychologists, mathematicians, economists, and political scientists exploring the possibility of creating “machines that can think”.[1]

From those early days of computer science to Deep Blue defeating Garry Kasparov at chess, the quest to make machines that can behave and think like humans has inspired generations of innovators. Sensors in machinery, facial recognition software, chatbots, recommendation engines, and even those dumb block-headed villagers in Minecraft are all examples of applied AI.

The reassuring notion that AI has been evolving as a part of the technology we live with and use daily is key to making sense of where we are now, and what opportunities exist moving forward.

Don’t Worry About the Technical Jargon

People who like to talk about AI use technical jargon that can make the topic seem overwhelming. Here’s what you need to know about AI terminology:

- Most technical terms thrown around have existed for decades, so don’t feel like they are a new thing you need to become an expert on.

- The distinctions between them matter most to people developing AI or who want to consult or speak about it on stage. They are less pertinent to people who simply want to take advantage of AI capabilities.

AI will continue to be baked into the software and devices that hardware and software makers build, so you don’t need to know how the sausage is made to incorporate it into your business strategy.

AI Explained, Simply

Okay, so you don’t need to be an expert on AI to take advantage of it, but let’s say you want to know enough to sound informed on the topic in a meeting with your boss or other executives. Here’s what’s what in a nutshell.

The Three Main Classifications of AI

There are three main levels of AI that people talk about, but since two are theoretical, only the first needs to be considered.

- Artificial Narrow Intelligence (ANI): Narrow AI is what exists today. It has incredible capabilities but is referred to as narrow or weak because each system is built for a specific task or set of tasks, and although it can simulate human behavior and thinking, it depends on humans to provide its data sets and program its human-like behavior.

- Artificial General Intelligence (AGI): Also known as strong AI, this is a more autonomous type of artificial intelligence that would no longer rely on human programming to advance its capabilities. It would be capable of performing as well as, or better than, humans at a wide range of cognitive tasks. Technologists are divided between working toward it and warning against it. Billions of dollars are being invested in pursuing AGI, and some believe it is within reach, but it does not currently exist.

- Artificial Super Intelligence (ASI): ASI is a theoretical level of AI that surpasses human intelligence in every aspect. It would possess cognitive abilities far beyond those of the smartest humans and could potentially solve complex problems, invent new technologies, and make decisions at a level humans can’t comprehend. The development of ASI raises significant ethical and existential concerns regarding control, safety, and the future of humanity. Dystopian sci-fi stories like Battlestar Galactica, I, Robot, and The Matrix are built around this type of sentient AI.

Basic AI Terms and Technical Terms

Here are some common terms you may hear when people talk about different AI applications and what powers them.

- Generative AI: Generative AI refers to technology that can create new content, such as images, text, or even music, based on patterns it has learned from existing data. It's like having a creative assistant that can generate new ideas or content.

- Large Language Models: Large Language Models are a type of advanced Generative AI system trained on vast amounts of text data, enabling them to understand and generate human-like text. They power applications like virtual assistants and language translation services, making interactions with computers more natural and conversational.

- Predictive AI: Predictive AI uses algorithms to analyze data and make educated guesses about future outcomes. It's like having a data-science intern helping you anticipate trends, customer behavior, and market changes so you can make informed decisions.

- Machine Learning: Machine Learning is a subset of AI focused on teaching computers to learn from data and improve over time without being explicitly programmed. It's like teaching a child to ride a bike by letting them practice and learn from their mistakes, eventually becoming proficient.

- Neural Networks: Neural Networks are a fundamental component of AI inspired by the human brain's structure and function. They consist of interconnected nodes (neurons) organized in layers, and they excel at tasks like pattern recognition and classification, powering many AI applications from image recognition to autonomous driving.

- Deep Learning: Deep Learning is a subset of machine learning that uses neural networks with multiple layers to process and extract features from data. It's particularly effective for complex tasks like image and speech recognition, and it's driving many advancements in AI research and applications.

- Natural Language Processing (NLP): NLP is a branch of AI focused on enabling computers to understand, interpret, and generate human language. It powers applications like chatbots, sentiment analysis, and language translation, revolutionizing how we interact with technology.
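For readers curious what "learning from data" looks like in practice, here is a minimal Python sketch of the idea behind Machine Learning and Predictive AI. It is an illustration under stated assumptions, not how production systems are built: the ad-spend numbers are made up, and the function names fit_line and predict are hypothetical. Instead of hand-writing a rule, the program repeatedly nudges two numbers to shrink its prediction error (a technique called gradient descent), then uses what it learned to forecast a new value.

```python
# Minimal sketch of "learning from data" (illustrative only).
# fit_line and predict are hypothetical helper names, and the data is made up.

def fit_line(xs, ys, learning_rate=0.01, epochs=5000):
    """Fit y ~ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start with a "know-nothing" guess
    n = len(xs)
    for _ in range(epochs):
        # How much the average squared error changes as w and b change
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error a little each pass
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(w, b, x):
    """Use the learned line to forecast a new value."""
    return w * x + b


# Hypothetical example: monthly ad spend (in $1,000s) vs. leads generated.
spend = [1, 2, 3, 4, 5]
leads = [12, 19, 31, 42, 48]

w, b = fit_line(spend, leads)
print(f"Learned line: leads = {w:.2f} * spend + {b:.2f}")
print(f"Predicted leads at $6k spend: {predict(w, b, 6):.1f}")
```

For this data the learned line settles near w ≈ 9.5 and b ≈ 1.9, the same answer ordinary least squares gives, so the forecast for $6k of spend is about 58.9 leads. Real predictive systems use far more data, more inputs, and well-tested libraries, but the core loop of guess, measure the error, and adjust is the same idea.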

Practical Adoption of AI

Despite the distinctions above, it’s not necessary to understand the nuances of each because they are so heavily intertwined and combined in the apps and software available to us. It's unlikely you'd need to make decisions at this level.

In real-world scenarios, the primary opportunities you have will be much simpler.

- AI can help you generate different types of content.

- AI can analyze data, help with forecasting, or perform tasks for you.

- AI can be used to extend or enhance your own capabilities or those of others.

As broad and simplistic as that seems, this is where most innovation occurs. Tech giants and software companies are investing billions to fund open-source tools and productized apps infused with AI-driven capabilities in these areas.

Yet despite the investment and the romantic notion of robots with a brain here to serve us, all AI still has a human dependency. There are people behind the curtain making and marketing the apps meant to appeal to people and businesses. The good news is that countless tools and apps exist and can improve the way you work. The bad news is that AI has become so deeply ingrained in technology that the line between what does and does not constitute AI is fuzzy.

AI is Just Technology

With the lines of AI blurred, and AI embedded in most modern technology, it becomes less necessary to call it out. When we look back, prefixing everything with "AI-powered" or "AI technology" will sound as outdated as if we continued to boast "computer-powered technology". When you boil it down, it's all just technology. I suggest framing it this way to help you see past the noise created by product and content marketing and see products and opportunities for what they are.

As an example, consider the volume of products marketing themselves as "AI Chatbots". Chatbots have a history of sucking, so "AI Chatbot" sounds way better, right? Here's the thing: Chatbots are basically the OG of AI! Every chatbot, going back to the first one in 1966, is part of AI's evolution. Have the technical sophistication and capabilities of chatbots improved wildly over the past couple of years? Absolutely. But labeling your product an AI chatbot is a gimmick.

"AI Chatbot" is the "Chai Tea" of tech. Stop saying it. It's redundant.

The point is that products and services are marketed as AI to get you pumped up, so look beyond the language used, and focus on the capabilities if you want to avoid being misled.

Tips for Adopting AI

With all the hype and all the noise, it can be tough to know where to start if you want to explore how AI can help you or your organization. Here are a few tips for those interested in getting started with AI.

- Lead with problems, not solutions. Don’t search for interesting AI solutions hoping to find one you can incorporate into your business or workflow. Identify workflow pain points or business problems first, then research what technology exists and might be worth exploring.

- Set realistic expectations. Don’t expect to revolutionize your business overnight. Every capability enabled through AI comes at a cost. An investment in training, infrastructure, or content enrichment may need to occur as a prerequisite to utilizing AI-enabled technology.

- Start small, offload to AI. Many organizations benefit immediately from AI by starting small. Before attempting to overhaul business processes and implement AI org-wide, consider how individuals can incorporate it. Use it to offload tasks, expedite time-intensive activities, improve quality, and boost productivity. Generative and Predictive AI excel at well-defined tasks and can free up people's time for work that requires more judgment and critical thinking.

- Learn to prompt, review, and re-prompt. I would not trust Alexa to watch my children, and you should not trust AI to write your blog for you. AI is people programming computers to talk to other computers and produce content and insights that computers think humans want. Developing your skill at writing quality prompts is essential, as is being able to interpret and edit the content or insights AI produces.

- Consult with others. Whatever problem you face, someone has likely tackled something similar. Research how other organizations have overcome challenges like yours, using AI or other advanced technology. Consult with experts who have experience enabling AI capabilities. Ask ChatGPT[3] or Google Gemini (formerly Bard) to recommend use cases specific to your context.

Move from Hype to a Healthy Sense of Urgency

Advances in artificial intelligence have opened up many new ways for technology to help us work smarter and faster. They have expanded our capabilities and changed how we interact with information in the physical and digital worlds. AI has become so pervasive that today's hype will soon become a basic expectation, a shift described by the Kano Model.[4]

But until then, there’s a golden opportunity for leaders who manage teams and products. Getting executives to invest in new technology, especially when they don’t fully understand it, is a common obstacle. It can be frustrating when roadblocks prevent funding for projects you know can benefit your business. But hype cycles can change that dynamic.

Organizations reacting to a hype cycle[5] can let the fear of being left behind drive reckless spending. But with an understanding of the landscape and the options and opportunities that exist, you can guide that energy (and the impulse to spend) in a constructive direction, transforming frantic anxiety into a healthy sense of urgency. Ideally, the timing then works in your favor, and you succeed in getting buy-in and support for your strategic digital initiatives.

Notes:

- In 1950, Alan Turing published his seminal paper, "Computing Machinery and Intelligence". He is one of the most influential thought-leaders of his time, and his work continues to inspire generations. Learn more about him at the Alan Turing Institute.

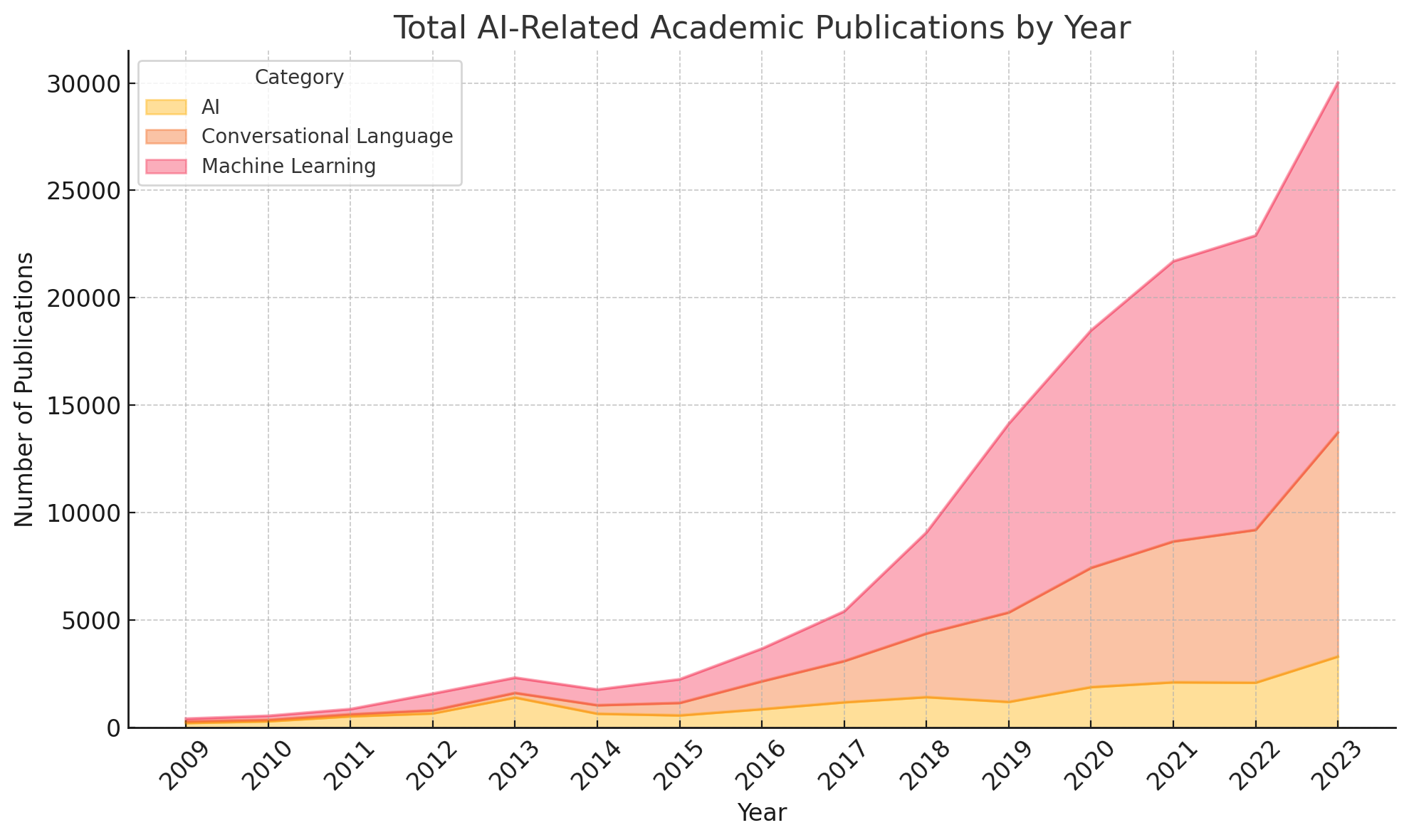

- Here's a link to the ArXiv publication data. ArXiv is a free distribution service and an open-access archive for nearly 2.4 million scholarly articles in the fields of science and technology. The graph itself was made by exporting the data from ArXiv and directing AI to analyze the data points and create a data visualization.

- GPT (as used in ChatGPT) is an acronym for Generative Pre-Trained Transformer. Chat GPT is an example of a tool that is built upon a wide array of AI technology.

- The Kano Model evolved as a way to map how new and differentiating features become a basic customer expectation over time.

- The AI Hype Cycle is Distracting Companies is an article by HBR describing how excitement, misunderstanding, and false expectations surrounding AI are impacting business operations.