AI Beyond the Screen

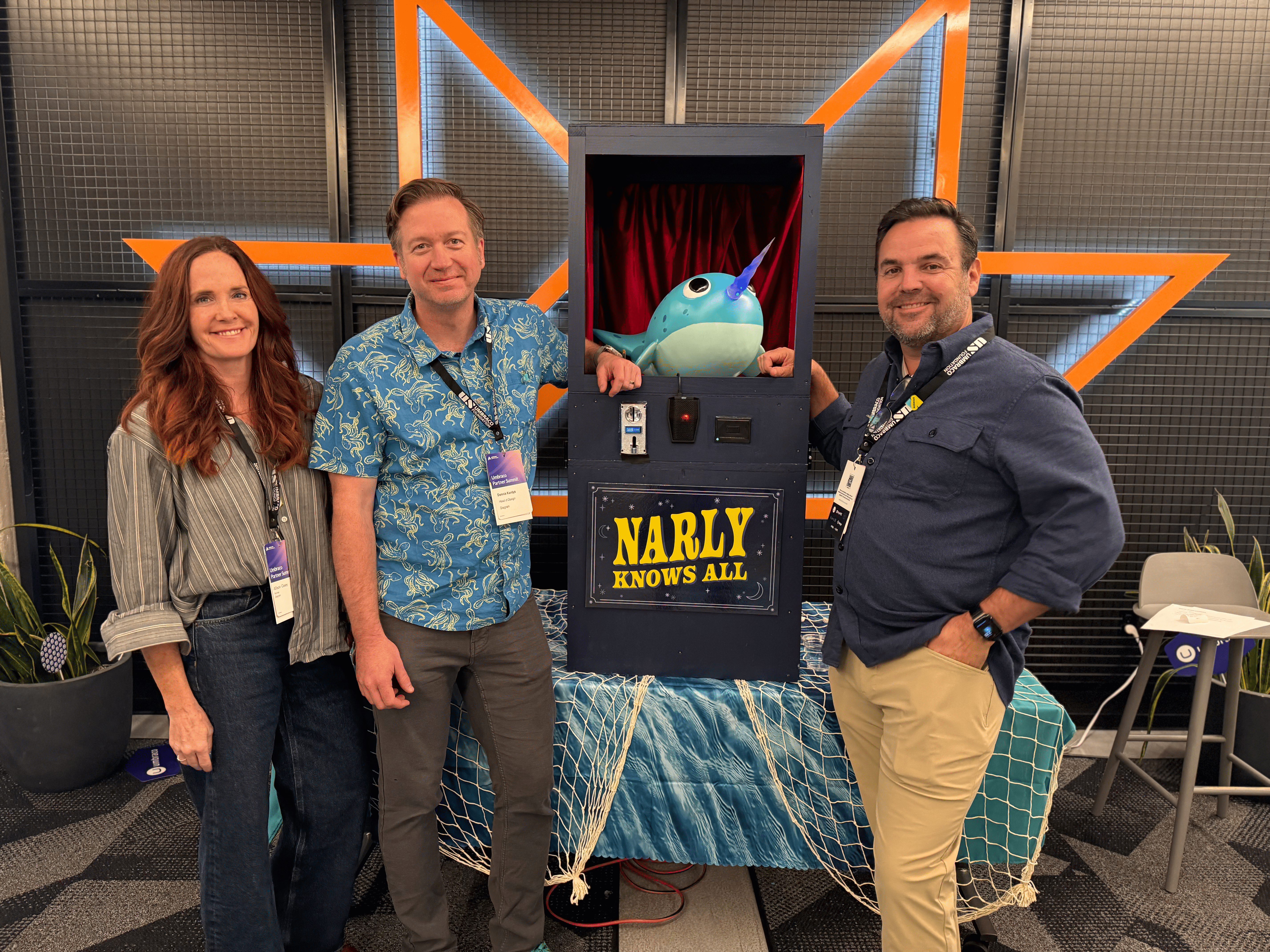

Diagram brought Umbraco’s mascot Narly to life as an AI-powered fortune-telling narwhal, blending tech, design, and play into a memorable physical experience.

October 14, 2025

6 minutes

Six months ago, if you’d asked us to predict the kinds of projects we’d be working on, “AI-enabled fortune-telling narwhal” probably wouldn’t have made the list. And yet, here we are!

So how did a mystical narwhal become the centerpiece of an interactive AI experience—and what did it teach us about designing with technology, people, and play in mind?

Project: Sponsorship Opportunity for Umbraco US Festival

Diagram volunteered to help sponsor the 2025 Umbraco US Festival, held October 2–3 at mHUB in Chicago. We signed on to sponsor the Spark Space, a large communal area outside the main stage room.

Typically, sponsors use these spaces to post signage and offer snacks or swag. This is nice, but not memorable. Inspired by Codegarden, we wanted to do something more outside the box.

The Umbraco community isn’t your typical tech crowd. It’s a mix of brilliant developers, designers, and digital strategists with a shared love for open-source creativity and a healthy appetite for absurdity. So we asked ourselves: how could we create something Umbraco-worthy—something fun, surprising, and conversation-starting?

That’s where Narly came in.

Introducing Narly

At the 2024 Umbraco Festival, conference organizers introduced Narly, an adorably cheerful narwhal mascot that quickly became a fan favorite. Stickers, balloons, cardboard cutouts—Narly was everywhere.

When we began planning for the 2025 Festival, Narly returned to mind. What if we could bring the mascot to life—not just as a symbol, but as something people could interact with?

We drew inspiration from old boardwalk fortune-telling machines like Zoltar from Big and imagined Narly as a mystical oracle: a whimsical, magical character with the power to reveal people’s fortunes.

Principle: Lead with People, Follow with Features

When new technology makes complex things easier, it’s tempting to start with the tool and look for an application. But a feature-first mindset can narrow your thinking.

Had we asked, “What can we do with AI?” we likely would’ve ended up with a generic demo. Instead, we focused on people: the conference-goers moving through the space, chatting between sessions, grabbing coffee.

We asked:

- What behaviors do we want to inspire?

- How can we create a shared experience that sparks conversation?

Only after we landed on the concept of a mystical narwhal oracle did we ask: “How can AI make this better?”

When AI Steps Out of the Browser

We wanted to make AI feel tangible by bringing it out of the chat window and into the real world as something people could see, hear, and interact with.

Fortune-telling Narly is a 2-foot 3D-printed narwhal with a glowing horn, housed in a blue cabinet inspired by those old boardwalk and amusement park machines. Attendees received custom tokens printed from recycled sea net. When they approached Narly, they could drop a token into the coin slot and ask a question, anything from “What’s the best pizza in Chicago?” to “Will I win the lottery?”

After a few mystical whale sounds and a flicker of light from the horn, a fortune printed out—short, witty, and entirely AI-generated. On the surface, it was a playful installation. Underneath, it was a live, fully orchestrated AI workflow.

How It Worked

- Coin drop: The coin reader detected the token and activated the microphone.

- Voice capture: The user’s question was recorded and streamed.

- Transcription: Speech-to-text converted the question into text.

- Prompting: The text and context were sent to ChatGPT via API.

- Response generation: The model produced a fortune in Narly’s distinctive voice.

- Output: The thermal printer delivered the response on the spot.
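The steps above can be sketched as a small orchestration layer. This is a minimal illustration, not the code that ran inside the cabinet: the class name, the stage callables, and the fallback message are all hypothetical, and each stage is a stand-in so the sketch runs without hardware or network access. The fallback behavior is an added assumption, included to show one way a failed stage might still produce a printed slip.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class FortunePipeline:
    """Chains the stages listed above; every stage is an injected callable."""

    record_question: Callable[[], bytes]    # microphone capture (hypothetical)
    transcribe: Callable[[bytes], str]      # speech-to-text (hypothetical)
    generate_fortune: Callable[[str], str]  # model call with system prompt (hypothetical)
    print_fortune: Callable[[str], None]    # thermal printer (hypothetical)
    fallback: str = "The tides are murky. Ask again later."  # invented placeholder text

    def on_coin_drop(self) -> str:
        """Run one full interaction, triggered by the coin reader."""
        try:
            audio = self.record_question()
            question = self.transcribe(audio).strip()
            # An empty transcript skips the model call and uses the fallback.
            fortune = self.generate_fortune(question) if question else self.fallback
        except Exception:
            # Any failed stage still yields a printed slip (an assumption of this sketch).
            fortune = self.fallback
        self.print_fortune(fortune)
        return fortune


# Stand-in stages so the sketch runs on its own.
printed: list[str] = []
pipeline = FortunePipeline(
    record_question=lambda: b"fake-audio",
    transcribe=lambda audio: "Will I win the lottery?",
    generate_fortune=lambda q: f"The sea hears '{q}' and smiles.",
    print_fortune=printed.append,
)
pipeline.on_coin_drop()
```

Passing each stage in as a callable keeps the sequencing testable on a laptop, with real devices and services swapped in later.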

Why Context Mattered

The real intelligence behind Narly came from its prompt. A model without context is generic. A model with rich system prompts can become a brand persona capable of voice, tone, humor, and nuance. These instructions defined who Narly was, how it should speak, and what lines it couldn’t cross.

Before the first question was asked, we established Narly’s tone, humor, and limits. The system prompt served as both script and guardrail. Here are some of its instructions:

- Speak like a mystical sea oracle.

- Use light nautical language and the occasional Chicago sports reference.

- Keep responses under 30 words.

- Deflect sensitive or “best/worst” questions with clever metaphors.

These rules kept the interaction consistent and safe, and they kept Narly in character. More importantly, they illustrated how prompt design is a form of governance. Prompts shape how an AI speaks and how it represents a brand in public.
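As a rough illustration of prompts as governance, the rules listed above could be assembled into a system message and paired with a simple check on the output. This is a sketch under assumptions, not Narly’s actual prompt. The opening persona line, the function names, and the trimming step are hypothetical. The role/content message shape is the one commonly used by chat-style APIs, and no API call is made here.

```python
NARLY_RULES = [
    "Speak like a mystical sea oracle.",
    "Use light nautical language and the occasional Chicago sports reference.",
    "Keep responses under 30 words.",
    'Deflect sensitive or “best/worst” questions with clever metaphors.',
]
MAX_WORDS = 30  # "under 30 words" means at most 29


def build_messages(question: str) -> list[dict[str, str]]:
    """Pair the fixed persona rules with one attendee question."""
    # The first line of the prompt is an invented placeholder persona statement.
    system_prompt = "You are Narly, a fortune-telling narwhal.\n" + "\n".join(
        f"- {rule}" for rule in NARLY_RULES
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def enforce_word_limit(fortune: str, max_words: int = MAX_WORDS) -> str:
    """Trim a reply that ignores the length rule, since a model may not always comply."""
    words = fortune.split()
    if len(words) < max_words:
        return fortune
    return " ".join(words[: max_words - 1]) + "..."


messages = build_messages("Will I win the lottery?")
```

Checking length in code as well as in the prompt is one way to keep a printed slip short even when the model drifts from its instructions.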

What We Noticed

Narly was a hit. People lined up to test its humor, challenge its boundaries, or just see it in action. The reactions were delightful—surprise, laughter, curiosity, and selfies.

Sure, the technology was interesting, but what stood out most was the connection it created. Attendees felt like they were having a small, shared moment with each other, and with the character brought to life through AI. Seeing intelligence and personality embodied in a physical object made it feel approachable and real.

What the Experiment Taught Us About AI Strategy

- AI Strategy Is About Orchestration

Narly worked because multiple systems—hardware sensors, live transcription, prompt design, and generative output—operated together. AI wasn’t the solution; it was one ingredient in the mix.

- Learn by Doing: Prototyping Teaches Faster Than Planning

Because so much of this was new, we had to move quickly: build, test, iterate, repeat. Observing real user behavior taught us more than any upfront planning could.

- The Line Between Digital and Physical Will Continue to Blur

As AI becomes embedded in hardware, wearables, and environmental systems, new opportunities and challenges will emerge for creating cross-channel experiences. Your content will need to move fluidly across devices and formats.

- Physical Context Adds New Data

Sensors, microphones, and environmental cues provide real-time signals richer than form fills or dashboards. Blending those inputs with AI lets organizations design experiences that respond to what’s happening in the moment.

Lessons for Organizations Exploring AI

While Narly was playful, the lessons apply broadly:

- Start small, but connect the parts. Simple experiments that make systems talk to each other reveal the real challenges of data flow and timing.

- Use prompts as governance tools. Define tone, limits, and boundaries early to ensure consistent behavior in public contexts.

- Design for interaction, not just output. The value of AI often comes from how it adapts to users, not just what it produces.

- Treat experimentation as strategy. Building a working prototype can teach more about feasibility and integration than a lengthy planning phase.

Each takeaway points to the same truth: AI is moving beyond screens and dashboards. It’s becoming part of the environments, products, and moments where people live and work.

From Narwhals to New Interfaces

Narly didn’t predict anyone’s future, but it hinted at where AI is headed. The next generation of digital experiences won’t live only in browsers or apps—they’ll blend physical and digital interaction in ways that feel more human and spontaneous.

For teams exploring AI, the question isn’t if it will move beyond the screen, but how you’ll design for it when it does.